Data Science Key Products

Qlik AutoML is a feature within the Qlik Sense analytics platform that aims to automate the process of creating machine learning models for data analysis. Here are some pros and cons of using Qlik AutoML:

Pros:

- Ease of Use: Qlik AutoML is designed to simplify the machine learning process for business users and analysts without extensive data science expertise. It automates the selection of algorithms, hyperparameter tuning, and model evaluation.

- Integration with Qlik Sense: Qlik AutoML seamlessly integrates with the Qlik Sense analytics platform, enabling users to perform data preprocessing, visualization, and analysis alongside automated machine learning.

- Time Efficiency: Automating the machine learning process saves time, allowing users to quickly generate models and insights from their data without manually experimenting with different algorithms and parameters.

- Algorithm Selection: Qlik AutoML automatically selects appropriate algorithms based on the nature of the data and the problem type, optimizing model performance.

- Reduced Technical Barrier: With Qlik AutoML, users don’t need in-depth knowledge of machine learning algorithms, reducing the technical barrier to using advanced analytics.

- Visualization of Results: The results of the automated machine learning process, including model accuracy and performance metrics, are visualized within the Qlik Sense platform.

- Quick Prototyping: Qlik AutoML is useful for rapid prototyping and exploring the potential insights hidden in the data.

Cons:

- Limited Customization: While Qlik AutoML streamlines the model-building process, it might lack the flexibility and customization options that data scientists need for complex tasks.

- Algorithm Limitations: Qlik AutoML focuses on predefined algorithms and might not cover all possible algorithms or cater to highly specialized tasks.

- Data Quality Dependencies: The effectiveness of Qlik AutoML depends on the quality and structure of the input data. Poor data quality can lead to suboptimal results.

- Limited Control Over Hyperparameters: While AutoML optimizes hyperparameters automatically, users might have limited control over specific parameter settings.

- Model Interpretability: Automated models might lack the interpretability and explanations that data scientists can provide through manual model creation.

- Complex Tasks: For more complex machine learning tasks or unique problem domains, Qlik AutoML might not provide the necessary customization and flexibility.

- Dependency on Platform: Qlik AutoML is only available within the Qlik Sense platform, which might limit its use for users who prefer other analytics or machine learning tools.
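
To make the phrase "automates the selection of algorithms, hyperparameter tuning, and model evaluation" more concrete, here is a minimal, library-free sketch of the general idea. It is not Qlik AutoML's API or its actual method: the function `automl_search`, the two candidate algorithms, and the toy data are all hypothetical and chosen only for illustration.

```python
import random
from collections import Counter

def majority_baseline(train, _params):
    """Candidate 1: always predict the most common training label."""
    label = Counter(y for _, y in train).most_common(1)[0][0]
    return lambda x: label

def knn(train, params):
    """Candidate 2: k-nearest-neighbours vote using squared Euclidean distance."""
    k = params["k"]
    def predict(x):
        nearest = sorted(
            train,
            key=lambda row: sum((a - b) ** 2 for a, b in zip(row[0], x)),
        )[:k]
        return Counter(y for _, y in nearest).most_common(1)[0][0]
    return predict

def accuracy(model, rows):
    """Model evaluation: share of rows predicted correctly."""
    return sum(model(x) == y for x, y in rows) / len(rows)

def automl_search(train, valid, candidates):
    """Try every (algorithm, hyperparameters) pair and rank by validation accuracy."""
    leaderboard = []
    for name, build, grid in candidates:
        for params in grid:
            model = build(train, params)
            leaderboard.append((accuracy(model, valid), name, params))
    leaderboard.sort(key=lambda entry: entry[0], reverse=True)
    return leaderboard

# Toy data (an assumption for this sketch): two well-separated 2-D clusters.
rng = random.Random(0)
data = [((rng.gauss(0, 1), rng.gauss(0, 1)), 0) for _ in range(100)]
data += [((rng.gauss(3, 1), rng.gauss(3, 1)), 1) for _ in range(100)]
rng.shuffle(data)
train, valid = data[:150], data[150:]

candidates = [
    ("majority_baseline", majority_baseline, [{}]),
    ("knn", knn, [{"k": k} for k in (1, 3, 5, 7)]),
]
leaderboard = automl_search(train, valid, candidates)
best_score, best_name, best_params = leaderboard[0]
print(best_name, best_params, round(best_score, 3))
```

A real AutoML product searches a far larger space and adds preprocessing, cross-validation, and explainability, but the loop above shows why the user's control is limited to what the candidate list and parameter grids expose.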

Looker is a cloud-based business intelligence (BI) and data visualization platform, now part of Google Cloud, that helps organizations explore, analyze, and share their data.

With Looker, users can:

- Create interactive and dynamic dashboards.

- Run ad-hoc queries to gain insights.

- Collaborate on data analysis and visualizations.

Its user-friendly interface and robust features make it a preferred choice for data-driven decision-making.

Learn more about Looker

Google Colab is a cloud-based platform that provides a Jupyter Notebook environment for writing and executing code, particularly in Python. It’s commonly used for data analysis, machine learning, and collaborative coding.

With Colab, users can take advantage of:

- Collaborative Notebooks: Google Colab allows multiple users to collaborate on the same notebook simultaneously. You can share the notebook with colleagues, and everyone can see changes in real-time as well as contribute by writing and executing code.

- Sharing Notebooks: You can easily share Colab notebooks with others using the “Share” button at the top right corner of the interface. This generates a shareable link that allows collaborators to access the notebook.

- Integrating with Google Drive: Colab integrates seamlessly with Google Drive. You can save your notebooks directly to Google Drive and share them with others. This ensures that your work is saved securely and can be accessed from any device.

- GPU and TPU Support: Colab provides free, usage-limited access to GPU and TPU (Tensor Processing Unit) resources, which can significantly speed up computations for machine learning tasks.

- External Integration: You can integrate external services like GitHub, BigQuery, and more directly into your Colab notebooks to leverage data and resources.

Its user-friendly interface and robust features make it a preferred choice for data-driven decision-making.

Learn more about Colab

H2O.ai is a company that specializes in open-source artificial intelligence (AI) and machine learning platforms. It is known for its H2O platform, which provides tools and frameworks for building machine learning models, conducting data analysis, and making predictions. Customers use the H2O AI Cloud platform to build and operate AI applications that address complex business problems.

With H2O.ai, users can:

- Automate Model Building: Leverage automated machine learning (AutoML) for efficient model creation. Build accurate models without extensive manual intervention.

- Scale with Distributed Computing: Handle large datasets and complex computations with distributed computing. Scale up for small tasks or enterprise-level applications.

- Integrate Seamlessly: Integrate with popular data science tools like R and Python. Seamlessly work within existing data analysis workflows.

- Deploy Models in Production: Deploy trained models into real-world production environments. Implement machine learning insights to drive business outcomes.

- Access Community Support: Engage with an active user community for guidance and collaboration. Benefit from documentation and shared knowledge resources.

H2O.ai enables users to address various data analysis tasks, deploy models in production, and tap into a supportive community for guidance. Its interface for building and deploying machine learning models is designed to suit users with varying technical expertise.

Learn more about H2O.ai

DataRobot is an automated machine learning platform that empowers organizations to efficiently build, deploy, and manage predictive models. It automates the end-to-end data science process, enabling users to harness the power of machine learning without extensive coding.

DataRobot is a machine learning platform for automating, assuring, and accelerating predictive analytics, helping data scientists and analysts build and deploy accurate predictive models in a fraction of the time required by other solutions.

With DataRobot, users can benefit from:

- Automated Model Building: DataRobot automates the complex process of building machine learning models, from data preparation to feature selection, algorithm choice, and hyperparameter optimization.

- Accelerated Decision-Making: Users can quickly develop and deploy accurate predictive models, empowering them to make data-driven decisions that drive business success.

- No Coding Required: DataRobot’s intuitive interface eliminates the need for extensive coding, enabling users of varying technical backgrounds to harness the power of advanced machine learning techniques.

- Model Management: The platform offers tools to deploy models into production, monitor their performance, and iteratively improve them over time, ensuring sustained accuracy.

- Enhanced Productivity: By automating repetitive tasks, DataRobot frees up data scientists and analysts to focus on higher-value tasks, improving efficiency and innovation within organizations.

With a user-friendly interface, DataRobot accelerates model development and fosters data-driven decision-making.

Learn more about DataRobot

Google AutoML, short for Google Cloud AutoML, is a suite of machine learning products on Google Cloud Platform (GCP) that lets developers and data scientists build and deploy custom machine learning models with minimal effort and expertise.

Google AutoML offers:

- Custom Models Made Easy: Google AutoML empowers users to create tailored machine learning models without extensive expertise.

- Automated Processes: It automates complex tasks like data preprocessing and model tuning, speeding up development.

- Use-Case Specialization: AutoML offers solutions for specific tasks like image classification and natural language processing.

- Integration and Scalability: Seamlessly integrates with Google Cloud services and scales for handling large datasets.

- Democratizing ML: By simplifying the process, it makes machine learning accessible to non-experts, enabling innovation across industries.

Its key differentiator is that it lets users without extensive machine learning expertise create custom models tailored to their tasks through automation and user-friendly interfaces, which broadens machine learning adoption.

Learn more about Google AutoML

Amazon SageMaker is a cloud machine-learning platform that enables developers to create, train, and deploy machine-learning models in the cloud. It also enables developers to deploy ML models on embedded systems and edge-devices.

With Amazon SageMaker, users can:

- Create and manage Amazon SageMaker resources, such as notebooks, experiments, and models.

- Access and edit data stored in Amazon S3.

- Run machine learning algorithms and pipelines.

- Deploy machine learning models to production.

Its interface is designed to make it easy to create and manage Amazon SageMaker resources.

Learn more about Amazon SageMaker

Azure Machine Learning is a cloud-based platform from Microsoft that empowers users to build, deploy, and manage machine learning models at scale. It offers a wide range of tools, automated workflows, and integration with Azure services, making it efficient for data scientists and developers to create advanced AI solutions.

Azure Machine Learning empowers data scientists and developers to build, deploy, and manage high-quality models faster and with confidence. It accelerates time to value with industry-leading machine learning operations (MLOps), open-source interoperability, and integrated tools.

With Azure ML, users can:

- Build and Train Models: Easily create machine learning models using a variety of algorithms and frameworks.

- Automate Workflows: Streamline end-to-end machine learning processes with automated pipelines.

- Deploy at Scale: Deploy models as web services for real-time predictions and scale using Azure’s cloud infrastructure.

- Collaborate Effectively: Collaborate with teams using version control, notebooks, and model sharing.

- Monitor Performance: Continuously monitor models in production for accuracy and adjust as needed.
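
As a rough illustration of what "continuously monitor models in production for accuracy" can mean in practice, the sketch below tracks accuracy over a sliding window of recent predictions and raises a flag when it drops below a threshold. This is not the Azure Machine Learning API; the class `AccuracyMonitor`, its parameters, and the sample predictions are hypothetical.

```python
from collections import deque

class AccuracyMonitor:
    """Track accuracy over the most recent `window` labelled predictions."""

    def __init__(self, window=100, threshold=0.8):
        # deque(maxlen=...) silently discards the oldest outcome when full.
        self.outcomes = deque(maxlen=window)
        self.threshold = threshold

    def record(self, predicted, actual):
        """Store whether a prediction matched the label that arrived later."""
        self.outcomes.append(predicted == actual)

    @property
    def accuracy(self):
        """Windowed accuracy, or None before any outcome is recorded."""
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_attention(self):
        """True when windowed accuracy has fallen below the threshold."""
        acc = self.accuracy
        return acc is not None and acc < self.threshold

# Hypothetical stream of (predicted, actual) pairs from a deployed model.
monitor = AccuracyMonitor(window=5, threshold=0.8)
for predicted, actual in [(1, 1), (0, 0), (1, 1), (1, 0), (0, 1), (1, 0)]:
    monitor.record(predicted, actual)

print(monitor.accuracy, monitor.needs_attention())
```

In a managed platform the same idea is usually wired to alerts or retraining pipelines rather than a print statement.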

Differentiating features of this product include integration with the Azure ecosystem, AutoML, MLOps, hybrid deployment, pre-built AI models, and enterprise-level security.

Learn more about Azure Machine Learning

RapidMiner provides data mining and machine learning procedures including: data loading and transformation (ETL), data preprocessing and visualization, predictive analytics and statistical modeling, evaluation, and deployment. RapidMiner is written in the Java programming language.

RapidMiner is a data science platform that offers a range of tools for data preparation, machine learning, and advanced analytics. Here are some pros and cons of using RapidMiner as a data science product:

Pros:

- User-Friendly Interface: RapidMiner provides a visual interface that makes it accessible to both data scientists and business users without extensive coding knowledge.

- End-to-End Data Science: It offers a comprehensive suite of tools for data preprocessing, modeling, evaluation, and deployment, enabling users to perform end-to-end data science tasks in one platform.

- Large Collection of Algorithms: RapidMiner supports a wide variety of machine learning algorithms, making it suitable for various predictive modeling and data analysis tasks.

- Automated Machine Learning (AutoML): The AutoML functionality helps users select the best algorithms and preprocessing steps automatically, simplifying the model-building process.

- Data Visualization: The platform includes data visualization capabilities to help users explore and understand their data.

- Collaboration: RapidMiner supports collaboration features, allowing teams to work together on projects, share workflows, and improve overall productivity.

- Integration: It integrates with various data sources and platforms, making it convenient to import and export data.

Cons:

- Complex Workflows: While the visual interface simplifies many tasks, complex workflows can become challenging to manage and troubleshoot.

- Resource Intensive: Large datasets or resource-intensive tasks may require a powerful system to ensure smooth processing.

- Learning Curve: Despite its user-friendly interface, learning how to effectively use the platform’s features and understand its concepts can still take time.

- Licensing Costs: RapidMiner’s licensing costs may be a concern for smaller organizations or individuals.

- Limited Customization: While it offers a wide range of tools, users with specialized requirements may find limitations in customization.

- Deployment Challenges: Transitioning from building models in RapidMiner to deploying them in production environments may require additional effort and expertise.

- Data Wrangling Complexity: Although it offers data preprocessing tools, more complex data wrangling scenarios might still require some coding knowledge.

Dataiku DSS (Data Science Studio) is a collaborative data science and advanced analytics platform for data professionals: data scientists, data engineers, data analysts, data architects, and CRM and marketing teams. It provides tools for data preparation, data integration, machine learning, and collaborative data science workflows. Here are some pros and cons of using Dataiku as a data science product:

Pros:

- End-to-End Data Science: Dataiku offers a comprehensive set of tools that cover the entire data science lifecycle, from data wrangling and preprocessing to model building, evaluation, and deployment.

- Visual Interface: Its visual interface makes it accessible to data scientists, analysts, and business users, allowing them to work collaboratively on projects.

- AutoML: Dataiku provides AutoML capabilities that automatically select and optimize machine learning models, simplifying the model selection process.

- Custom Code Integration: While offering a visual interface, Dataiku also allows users to integrate custom code, catering to advanced users’ needs.

- Data Preparation: The platform’s data preparation features streamline the process of cleaning, transforming, and structuring data for analysis.

- Scalability: Dataiku can handle large datasets and scale to meet the needs of enterprise-level projects.

- Collaboration: It supports collaboration among team members, allowing them to work together on projects, share workflows, and communicate within the platform.

- Deployment: Dataiku offers options to deploy models to production, supporting a variety of deployment environments and frameworks.

Cons:

- Learning Curve: Despite its visual interface, mastering all of Dataiku’s features and best practices still takes time.

- Pricing: The platform’s pricing might be a concern for smaller organizations or individuals, and pricing details are often customized based on specific needs.

- Resource Intensive: Complex analyses and large datasets might require robust hardware resources to ensure smooth performance.

- Complexity for Simple Tasks: While suitable for complex data science projects, Dataiku’s extensive features might make it more cumbersome than necessary for simpler tasks.

- Integration Challenges: Integrating Dataiku with existing systems or databases may require some technical expertise.

- Customization Limitations: While it allows custom code integration, users with very specialized requirements might still encounter limitations in customization.

- Documentation and Support: While Dataiku provides documentation and support, users might sometimes find it challenging to find specific answers to their queries.

Scikit-learn (also known as sklearn) is an open-source machine learning library for Python and one of the most widely used. It provides a selection of efficient tools for machine learning and statistical modeling, including classification, regression, clustering, and dimensionality reduction, through a consistent interface. Here are some pros and cons of using Scikit-Learn as a machine learning product:

Pros:

- Simplicity: Scikit-Learn is designed with simplicity in mind, making it a great choice for beginners and experienced users alike. Its consistent API and well-documented functions make it easy to learn and use.

- Wide Range of Algorithms: Scikit-Learn offers a comprehensive collection of machine learning algorithms, including classification, regression, clustering, dimensionality reduction, and more.

- Integration with Other Libraries: Scikit-Learn works well with other popular Python libraries, such as NumPy, Pandas, and Matplotlib, enabling seamless integration of data manipulation, analysis, and visualization.

- Community and Resources: Being open-source, Scikit-Learn has a strong community of users and contributors. This leads to a wealth of tutorials, examples, and community support.

- Consistency: The library maintains a consistent API across different algorithms, making it easier to switch between methods and experiment with different techniques.

- Extensive Documentation: Scikit-Learn provides comprehensive documentation with examples, making it easier for users to understand the functions and apply them effectively.

- Efficiency: The library is optimized for efficiency and performance, making it suitable for many computationally demanding tasks on datasets that fit in memory.

Cons:

- Limited to Python: Scikit-Learn is limited to Python, so users who prefer other programming languages may need to use different libraries.

- Simplistic Tools: While Scikit-Learn is excellent for traditional machine learning tasks, it may not cover some advanced topics, such as deep learning, that require more specialized libraries like TensorFlow or PyTorch.

- Limited Data Wrangling: Scikit-Learn covers common preprocessing steps such as scaling, encoding, and imputation, but more complex data wrangling might require additional tools or libraries such as Pandas.

- No Reinforcement Learning: Scikit-Learn covers supervised and unsupervised learning, so users seeking reinforcement learning techniques may need to look elsewhere.

- Limited Built-in Visualizations: Scikit-Learn provides basic visualization tools, but more advanced visualization may require integration with other visualization libraries.

- Less Emphasis on Deep Learning: Scikit-Learn is not designed specifically for deep learning, so users interested in deep neural networks may need to consider other libraries.
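
The "consistent API" point above is easiest to see in code. The following is a small sketch, assuming scikit-learn is installed; the synthetic dataset and the two models are arbitrary choices for illustration, not a recommendation. Both estimators are trained and scored through the same `fit` and `score` methods, so switching algorithms is a one-line change.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic binary classification data, fixed seed for repeatability.
X, y = make_classification(n_samples=500, n_features=10, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

# Two very different algorithms behind the same estimator interface.
models = {
    "logistic_regression": LogisticRegression(max_iter=1000),
    "decision_tree": DecisionTreeClassifier(random_state=0),
}

scores = {}
for name, model in models.items():
    model.fit(X_train, y_train)                   # same training call
    scores[name] = model.score(X_test, y_test)    # same evaluation call (accuracy)

print(scores)
```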

Watson Studio provides the environment and tools for you to work collaboratively on data to solve your business problems. You can choose the tools you need to analyze and visualize data, to cleanse and shape data, to ingest streaming data, or to create and train machine learning models.

Key features and capabilities of the platform:

- Data Preparation: Watson Studio allows you to access, clean, and shape your data using a visual interface. It supports various data sources and provides tools for data wrangling.

- Data Visualization: You can create interactive data visualizations and dashboards to gain insights from your data using the integrated visualization tools.

- Jupyter Notebooks: Watson Studio integrates Jupyter Notebooks, enabling data scientists to write, execute, and share code in Python, R, and other languages.

- Machine Learning: The platform provides a collection of pre-built machine learning algorithms and models that can be customized for specific tasks. You can also build your own machine learning models using popular libraries like scikit-learn and TensorFlow.

- AutoAI: Watson Studio offers AutoAI, which automates the process of building machine learning models, helping you quickly find the best-performing model for your data.

- Model Deployment: You can deploy and manage machine learning models as APIs for use in applications, without requiring extensive coding.

- Collaboration: The platform supports collaboration among team members by allowing them to work together on projects, share code, and track changes.

- Cloud Deployment: IBM Watson Studio is available as part of IBM Cloud, which means you can leverage cloud resources to scale your data science projects as needed.

- Enterprise-Ready: The platform offers features like version control, data governance, and security measures to support enterprise-level data science initiatives.

Its user-friendly interface and robust features make it a preferred choice for data-driven decision-making.

Learn more about IBM Watson Studio

Data Science Visions: Top Trends

Cloud Data Ecosystems

Cloud data ecosystems are becoming increasingly popular as organizations look for ways to manage and analyze their data more efficiently and effectively. A cloud data ecosystem is a collection of cloud-based tools and services that work together to provide a complete solution for data management.

Some of the trending cloud data ecosystems in 2023 include:

Google Cloud Platform (GCP): GCP offers a wide range of cloud data services, including BigQuery, Cloud Dataproc, and Cloud Dataflow.

Amazon Web Services (AWS): AWS is another major player in the cloud computing market, and it offers a variety of data services, including Amazon Redshift, Amazon EMR, and Amazon Kinesis.

Microsoft Azure: Azure is Microsoft's cloud computing platform, and it offers a number of data services, including Azure Data Lake Storage, Azure Databricks, and Azure Machine Learning.

IBM Cloud: IBM Cloud offers a wide range of cloud services, including IBM Cloud Pak for Data, IBM Cloud Data Science Workbench, and IBM Watson Studio.

Oracle Cloud Infrastructure (OCI): OCI is Oracle's cloud computing platform, and it offers a variety of data services, including Oracle Autonomous Data Warehouse, OCI Data Flow, and OCI Data Integration.

These are just a few of the many cloud data ecosystems available. The best ecosystem for a particular organization will depend on its specific needs and requirements.

Here are some of the key trends driving the growth of cloud data ecosystems:

The increasing volume and velocity of data: The amount of data being generated is growing exponentially, and this is putting a strain on traditional data management systems. Cloud data ecosystems can help organizations to store, process, and analyze this data more efficiently.

The growing need for agility and scalability: Businesses need to be able to adapt quickly to changing market conditions, and cloud data ecosystems can help them to do this by providing scalable and flexible solutions.

The increasing demand for security and compliance: Cloud data ecosystems can help organizations to protect their data and comply with regulations.

The rise of artificial intelligence and machine learning: AI and machine learning are becoming increasingly important for businesses, and cloud data ecosystems can provide the infrastructure and tools needed to develop and deploy these technologies.

Overall, cloud data ecosystems are a powerful tool that can help organizations to manage and analyze their data more efficiently and effectively. As the trends mentioned above continue to drive the growth of data, cloud data ecosystems are likely to become even more popular in the years to come.

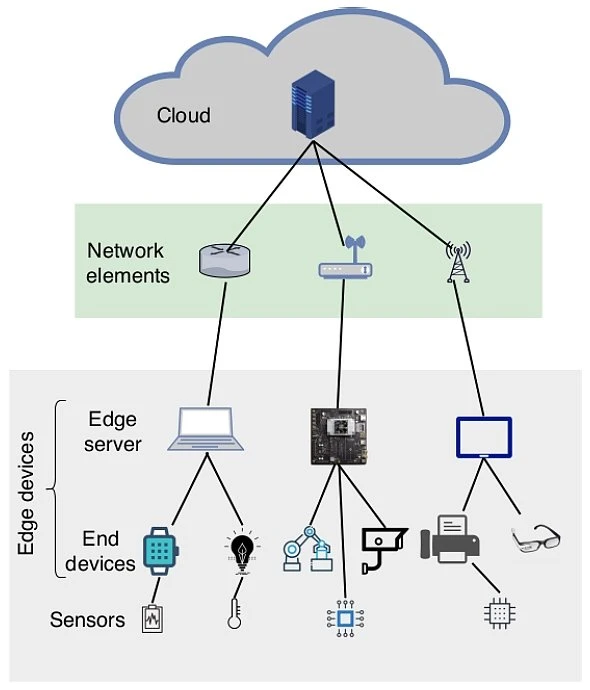

Edge AI

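Edge AI runs AI models on or near the devices where data is generated, rather than sending all data to a centralized cloud. This enables real-time insights, pattern detection, and data privacy. Edge AI is becoming increasingly important as the volume and velocity of data generated by IoT devices continue to grow.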

Edge AI is a rapidly growing field, and there are many exciting trends to watch in 2023. Here are a few of the most promising:

The rise of 5G: 5G will enable faster and more reliable connectivity, which will be essential for edge AI applications that require real-time data processing.

The development of new hardware accelerators: New hardware accelerators, such as GPUs and FPGAs, are being developed specifically for edge AI applications. These accelerators can help to improve the performance and efficiency of edge AI models.

The growth of open-source frameworks: Open-source frameworks, such as TensorFlow and PyTorch, are making it easier for developers to build and deploy edge AI applications.

The increasing adoption of edge AI in the enterprise: Edge AI is being adopted by a growing number of enterprises for applications such as predictive maintenance, fraud detection, and anomaly detection.

The development of new edge AI applications: Edge AI is being used in a wide range of new applications, such as self-driving cars, smart homes, and industrial automation.

These are just a few of the trends that are shaping the future of edge AI. As the technology continues to develop, we can expect to see even more exciting applications of edge AI in the years to come.

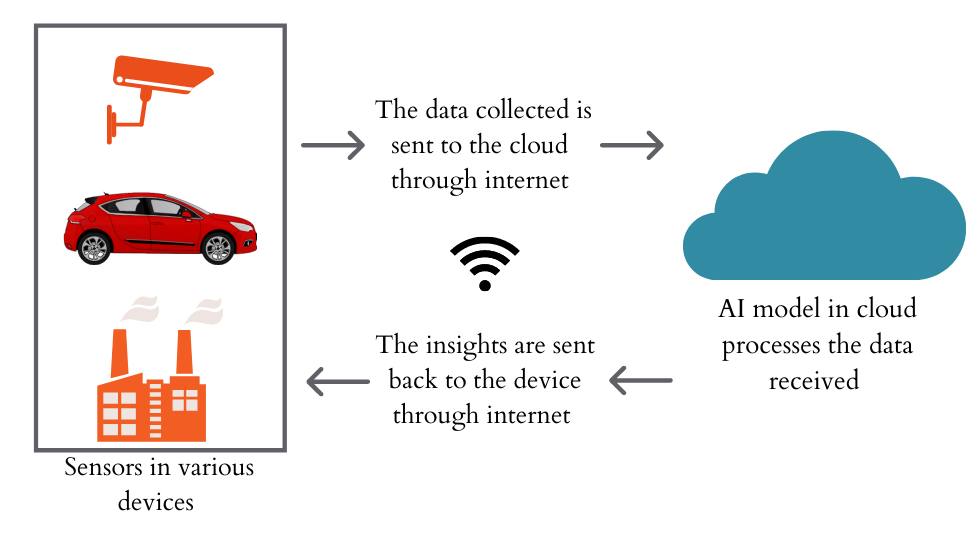

How Does AI Work?

Here are some specific examples of how edge AI is being used in different industries:

Retail: Edge AI is being used in retail to improve customer service, personalize recommendations, and prevent fraud. For example, edge AI can be used to analyze customer behavior in stores, identify fraudulent transactions, and recommend products that are likely to be of interest to a particular customer.

Healthcare: Edge AI is being used in healthcare to improve diagnosis, treatment, and patient monitoring. For example, edge AI can be used to analyze medical images, identify diseases, and track patient vital signs.

Transportation: Edge AI is being used in transportation to improve safety, efficiency, and sustainability. For example, edge AI can be used to monitor traffic conditions, detect accidents, and optimize routes.

Energy: Edge AI is being used in energy to improve grid efficiency, reduce emissions, and manage demand. For example, edge AI can be used to monitor power usage, detect outages, and optimize energy delivery.

As the technology continues to develop, we can expect to see even more innovative and groundbreaking applications in the years to come.
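
Several of the examples above (tracking vital signs, monitoring power usage, detecting outages) come down to spotting unusual readings on the device itself, so that only alerts need to leave it. The sketch below shows one simple way this could look, using a running mean and standard deviation (Welford's online algorithm) with a z-score threshold. The class `EdgeAnomalyDetector`, its thresholds, and the sensor readings are hypothetical; real deployments typically use trained models and tuned parameters.

```python
import math

class EdgeAnomalyDetector:
    """Flag readings far from the running mean, using constant memory."""

    def __init__(self, z_threshold=3.0, warmup=10):
        self.n = 0          # number of readings seen so far
        self.mean = 0.0     # running mean
        self.m2 = 0.0       # running sum of squared deviations (Welford)
        self.z_threshold = z_threshold
        self.warmup = warmup

    def update(self, value):
        """Return True if `value` looks anomalous, then fold it into the statistics."""
        anomalous = False
        if self.n >= self.warmup:
            std = math.sqrt(self.m2 / (self.n - 1))
            anomalous = std > 0 and abs(value - self.mean) / std > self.z_threshold
        # Welford's online update of mean and squared deviations.
        self.n += 1
        delta = value - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (value - self.mean)
        return anomalous

# Hypothetical temperature readings with one obvious spike.
detector = EdgeAnomalyDetector()
readings = [20.0, 20.5, 19.8, 20.1, 20.3, 19.9, 20.2, 20.0, 19.7, 20.4, 20.1, 35.0, 20.2]
alerts = [(i, r) for i, r in enumerate(readings) if detector.update(r)]
print(alerts)  # only these (index, value) pairs would be sent upstream
```

Because the detector keeps only three numbers of state, it fits on constrained devices, and the raw readings never have to leave them.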

Responsible AI

Responsible AI is the ethical and social dimension of AI that covers aspects such as value, risk, trust, transparency, accountability, regulation, and governance. As AI becomes more pervasive, it is important to ensure that it is used in a responsible and ethical way.

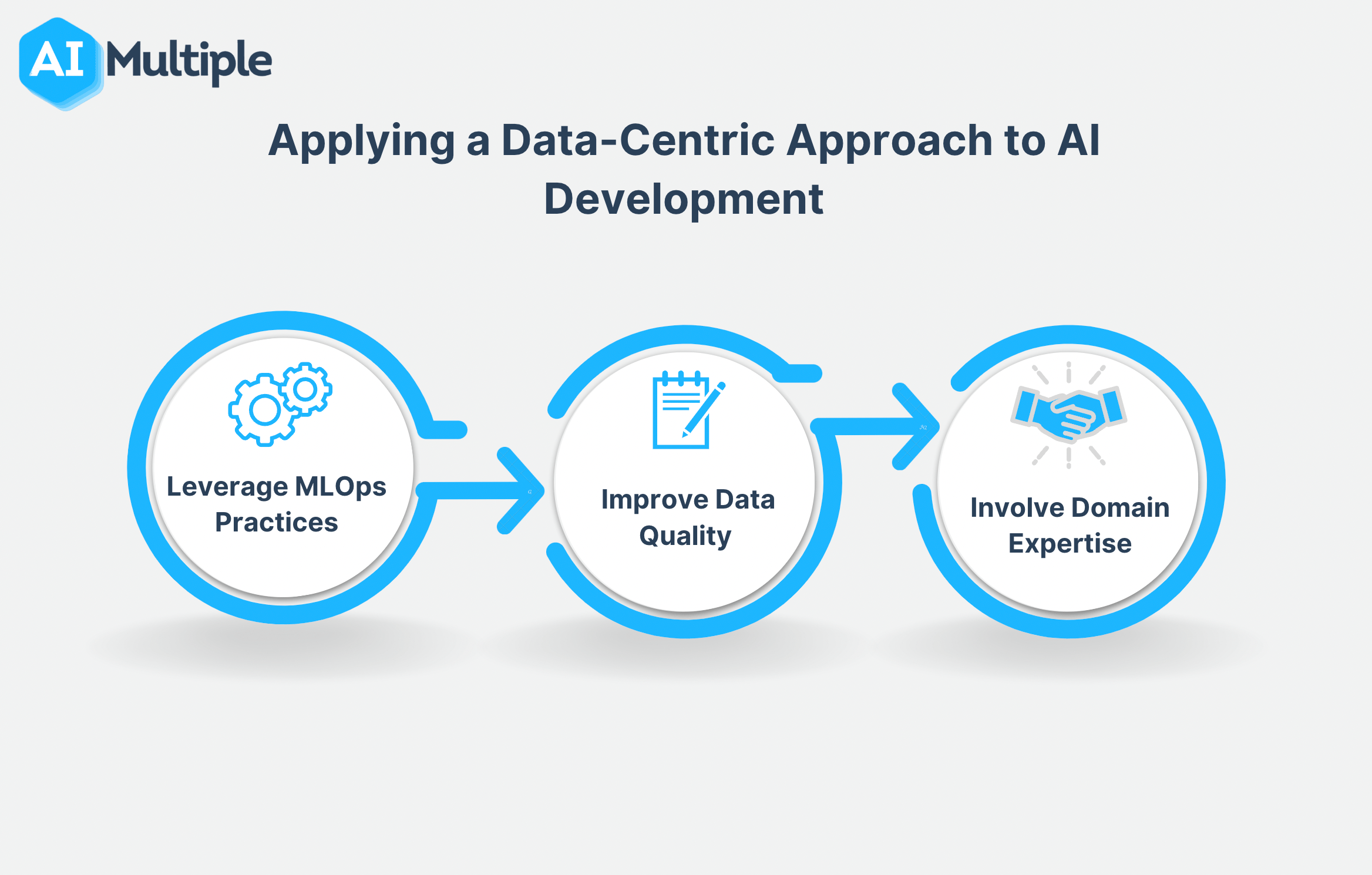

Data-Centric AI

Data-Centric AI is a new approach to AI that focuses on the data itself, rather than on the algorithms or models used to process the data. This approach is gaining popularity as it offers a more scalable and flexible way to build AI systems.

Here are some of the trending data-centric AI topics:

Data quality: This is the most important factor for data-centric AI. If the data is not of high quality, the AI system will not be able to make accurate predictions or decisions.

Data collection: This is the process of gathering data from a variety of sources. The data should be representative of the population or problem that the AI system is trying to solve.

Data preparation: This is the process of cleaning and formatting the data so that it can be used by the AI system. This includes removing noise, correcting errors, and standardizing the data.

Data labeling: This is the process of assigning labels to the data. This is important for supervised learning algorithms, which require labeled data to learn from.

Data augmentation: This is the process of artificially increasing the amount of data by creating new data points from existing data points. This can be done by applying transformations to the data, such as cropping, flipping, or rotating.

Data sharing: This is the process of making data available to other researchers and developers. This can help to improve the quality of AI systems by making it possible to train them on more data.

Data governance: This is the process of managing data in a way that ensures its quality, security, and privacy. This includes establishing policies and procedures for collecting, storing, processing, and sharing data.
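
Two of the steps above, standardizing data during preparation and augmenting data with flips, rotations, and crops, can be sketched in a few lines of plain Python. The helper names and the tiny 2x3 "image" are hypothetical and only meant to show the mechanics; image libraries provide equivalent, faster operations.

```python
import statistics

def standardize(values):
    """Data preparation: rescale to zero mean and unit (population) standard deviation.

    Assumes the values are not all identical, otherwise the deviation is zero.
    """
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    return [(v - mean) / std for v in values]

def flip_horizontal(image):
    """Data augmentation: mirror each row left to right."""
    return [row[::-1] for row in image]

def rotate_90_clockwise(image):
    """Data augmentation: rotate the grid a quarter turn clockwise."""
    return [list(row) for row in zip(*image[::-1])]

def crop(image, top, left, height, width):
    """Data augmentation: keep a height x width window starting at (top, left)."""
    return [row[left:left + width] for row in image[top:top + height]]

standardized = standardize([2.0, 4.0, 6.0])

# A tiny grid of pixel values standing in for an image.
image = [[1, 2, 3],
         [4, 5, 6]]
augmented = [
    flip_horizontal(image),
    rotate_90_clockwise(image),
    crop(image, 0, 1, 2, 2),
]
for variant in augmented:
    print(variant)
```

Each augmented variant keeps the original label, which is how one labeled example becomes several training examples.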

These are just a few of the trending data-centric AI topics. As AI technology continues to develop, it is important to stay up-to-date on the latest trends and best practices for ensuring that data is used effectively in AI systems.

Here are some of the benefits of data-centric AI:

Improved accuracy: Data-centric AI systems can make more accurate predictions and decisions because they are trained on cleaner, more consistent, and more representative data.

Increased efficiency: Data-centric AI systems can be more efficient because they can learn from data without human intervention.

Reduced costs: Data-centric AI systems can reduce costs by automating tasks that would otherwise be done by humans.

Enhanced decision-making: Data-centric AI systems can help businesses make better decisions by providing insights into data.

Improved customer experience: Data-centric AI systems can improve the customer experience by providing personalized recommendations and services.

By focusing on the data, data-centric AI can help businesses to improve their operations and make better decisions.

Accelerated AI Investment

The investment in AI is accelerating, as businesses and governments recognize the potential of AI to transform their industries. This investment is driving innovation in AI, and is leading to the development of new and more powerful AI systems.

Here are some of the trends behind accelerated AI investment:

The rise of cloud computing: Cloud computing has made it easier and more affordable for businesses to adopt AI technologies.

The development of new hardware accelerators: Hardware accelerators, such as GPUs, TPUs, and FPGAs, are making it possible to train and deploy AI models more quickly and efficiently.

The growth of open-source AI frameworks: Open-source AI frameworks, such as TensorFlow and PyTorch, are making it easier for developers to build and deploy AI applications.

The increasing adoption of AI by businesses: Businesses are increasingly adopting AI technologies to improve their operations and make better decisions.

The development of new AI applications: AI is being used in a wide range of new applications, in areas such as self-driving cars, healthcare, and finance.

These are just a few of the trends behind accelerated AI investment. As AI technology continues to develop, it is likely that we will see even more investment in this area.

Here are some of the reasons why AI investment is accelerating:

The potential of AI to revolutionize many industries: AI could reshape industries such as healthcare, manufacturing, and transportation. This has led to increased investment in AI technologies by businesses and governments.

The falling cost of AI technologies: The cost of AI technologies has been falling, making them more affordable for businesses and governments to adopt.

The increasing availability of data: Data is essential for training AI models. As the amount of data available continues to grow, it is likely that we will see even more investment in AI technologies.

The growing talent pool: There is a growing talent pool of AI experts, which is making it easier for businesses to adopt AI technologies.

The increasing regulatory support: Governments are increasingly providing regulatory support for AI technologies. This is making it more attractive for businesses to invest in AI.

By investing in AI, businesses and governments can gain a competitive advantage and improve their operations.

Here are some other trends that are worth keeping an eye on:

Low/no-code solutions are making it easier for people without a background in data science to build and deploy data science models. This is opening up the field of data science to a wider range of people and organizations.

Synthetic data is artificial data that is created to mimic real-world data. This can be used to train machine learning models when real-world data is not available or is too expensive to collect.

Explainable AI is a new field of research that focuses on developing AI systems that can explain their decisions in a way that is understandable by humans. This is important for ensuring that AI systems are used in a responsible and ethical way.

AI is being used to solve a wide range of social and environmental problems, such as climate change, poverty, and disease. This is an exciting area of research, and it is likely to continue to grow in importance in the years to come.
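As a rough illustration of the synthetic data idea mentioned above, the sketch below estimates the mean and standard deviation of a small real sample and then draws new values from a normal distribution with those parameters. The function names `fit_gaussian` and `synthesize` are hypothetical, and the assumption that the data is roughly normal is a strong simplification; practical synthetic data tools model many columns and their relationships together.

```python
import random
import statistics


def fit_gaussian(values):
    """Estimate the mean and sample standard deviation of the real data."""
    return statistics.mean(values), statistics.stdev(values)


def synthesize(values, n, seed=0):
    """Draw n synthetic values from a normal distribution fitted to `values`.

    Assumption: a single numeric column that is approximately normal.
    A fixed seed keeps the output reproducible.
    """
    mu, sigma = fit_gaussian(values)
    rng = random.Random(seed)
    return [rng.gauss(mu, sigma) for _ in range(n)]
```

Calling `synthesize(real_measurements, 1000)` would return 1,000 artificial values whose average sits close to that of the real sample, which can stand in for real data when it is scarce or expensive to collect.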